Publish or Perish: What Next?

- Laras Fadillah

- Oct 1, 2025

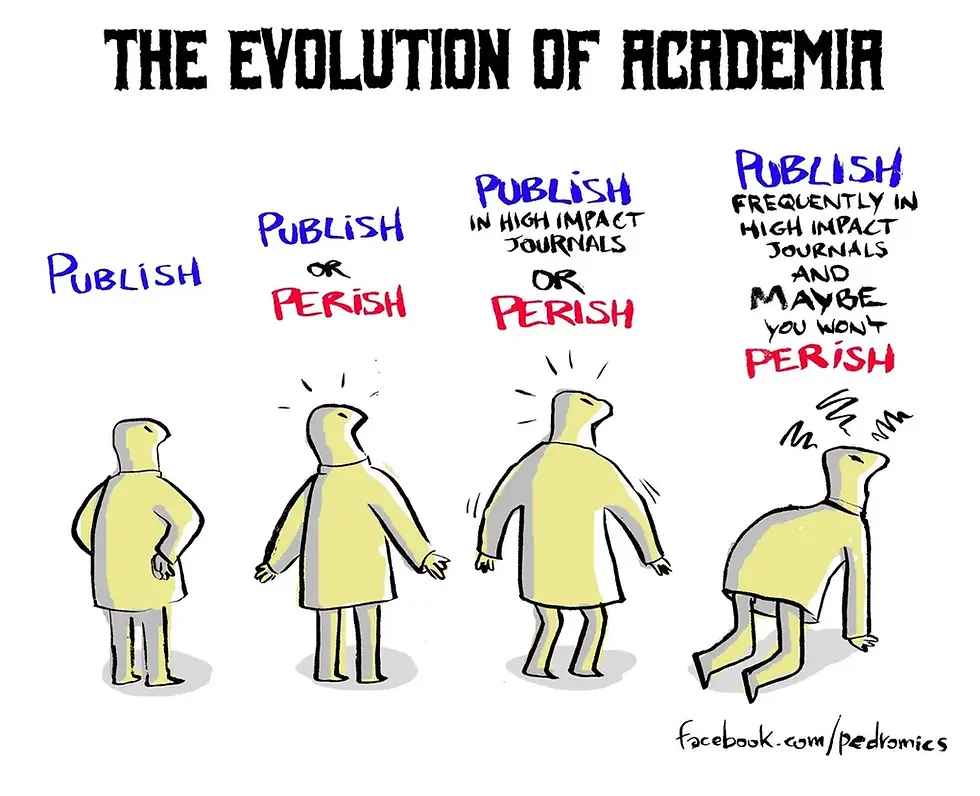

I saw this cartoon and thought: yep, that's academia in one picture now.

From publish --> publish or perish --> publish in high-impact journals --> publish frequently in high-impact journals, and maybe you won't perish.

It's funny… but it's also painfully true.

When I started my career in battery science, I thought research was simple: design experiments, collect data, share results. But the reality is far more complex.

Today, there's enormous pressure to publish, and to publish fast. Journals want "record-breaking" numbers. Funders want breakthroughs. Careers often depend more on the length of your publication list than the depth of your insights.

That's why we see a flood of papers every week claiming ultra-high energy density, thousands of stable cycles, or magical new electrolytes. The graphs look beautiful. The numbers are impressive.

But here's the problem:

Most of those results are measured in PEEK test cells or coin-type lab cells designed for research convenience, not industrial reality. They often don't reflect the stresses of the pouch or cylindrical cells that companies actually need to manufacture. A material that looks stable in a PEEK cell may degrade rapidly when scaled up. Interfaces that seem perfect in the lab may fail under real-world humidity, pressure, or cycling conditions.

Truth looks different. It's incremental. It's learning why lithium plates unevenly on copper. It's documenting how aluminum current collectors corrode at high voltage. It's admitting that sulfide electrolytes need careful interface engineering before they can be practical.

These findings may not win headlines, but they are the results that actually move technology forward. Because at the end of the day, industry doesn't care about beautiful figures. Industry cares about batteries that work safely, reliably, and at scale.

And here's the hardest part: I'm not sure how we can change our academic environment right now. Even for researchers inside the system, it's confusing to know which publications are truly reliable and worth building on, and which ones are just "too good to be true." Repeating something that never actually works wastes huge amounts of time, energy, and funding. Worse, it creates a ripple effect that slows down both academia and industry.

But if the system is broken, what should replace it?

Some ideas that researchers (and even journals) are starting to discuss:

Reproducibility over novelty: Science isn't progress if others can't replicate it. We need more credit for validation, not just flashy firsts.

Mentorship and teaching impact: A great researcher who builds up students and early-career scientists creates far more long-term value than a single "record-breaking" paper.

Collaboration and open science: Instead of siloed breakthroughs, reward projects that share data, methods, and tools for the community.

Industry & societal impact: Papers are important, but so is translating research into solutions: cleaner batteries, better healthcare, sustainable materials.

Yesterday I read a suggestion: what if PIs were limited to publishing only one paper per year? Imagine how that would shift the focus from quantity to clarity, reproducibility, and impact. Maybe the work we publish would matter more and waste less.

Of course, journals and institutions still measure us by metrics and impact factors. But if the only goal is "publish or perish", we waste energy chasing what looks impressive rather than what moves the field forward.

Good science doesn't stop at discovery; it survives in the real world.
